Table of Contents

A few months ago, my team had to build a new service. One of the goals was to gather and centralize data from a high-usage “processing” service currently spawned across tens of servers, in EU and America, processing 10k+ jobs per minute overall. At the time, it was extremely difficult to get a good overview of the job failures and investigate them as the data was decentralized. So we needed a SQL database with strong scalability, and the team was mostly used to PostgreSQL with Python Django for similar projects.

When designing this new service, our infrastructure team recommended that we try CockroachDB, as we had access to a CockroachDB cluster and as they were not sure they would have the capacity to easily provide a PostgreSQL able to fit our needs with replicas and sharding. Note that this CockroachDB cluster is co-located, hence we don’t leverage geo-distributions capabilities of CockroachDB. So we decided to look into building a Python Django service relying on CockroachDB. After a few months of developments and having the service in production for a while, I wanted to share a few feedbacks and discoveries we made along the road.

What is CockroachDB?

CockroachDB is a SQL database system, designed specifically and from the start to be distributed, while supporting ACID transactions and providing consistency. Therefore, it aims at addressing the common issue of database scalability in the realm of distributed systems and micro-services. Its name comes from the resilience and adaptability of cockroaches as the horizontal scalability mechanism of this database make it fault-tolerant. Personally, as a user, one of the biggest advantage of CockroachDB is that it supports PostgreSQL wire protocol, making it practically compatible with many frameworks and tools off-the-shelf (or almost… as we will discuss), such as the ones in our stack: Python Django & Metabase. More details can be found on Cockroach Labs’ website.

Prototype working out-of-the-box

So the team starts working on setting up a basic service in Django using CockroachDB, with a few models and endpoints interacting with those models. So far, it works just like any Django service using PostgreSQL: great! The only specific configuration we had to do was to use django-cockroachdb as backend for Django.

One of the goals for this new service was business intelligence and data analytics, remember? So we rapidly wanted to confirm we could use our existing tool for this: Metabase. There is no official support of CockroachDB in Metabase. However, CockroachDB supports PostgreSQL wire protocol, so Metabase can actually connect to the database as if it was a PostgreSQL database, and it works!

So quickly enough, we were able to creates a few objects in our Django models, and explore this data through Metabase. This was very promising and we decided to move forward toward production.

Scaling to production: INSERT and SELECT like a charm

A few weeks later, a first version of the new service is ready for production. Our instances from the “processing” service started sending data from the results of the jobs they processed to our new service, so that valuable data is centralized and we can start learning from it!

At this stage, our new service simply inserts data into several tables (thousands of records per minute), and batch-deletes outdated data with a CRON job. We then query the data through Metabase for analysis.

Our CockroachDB clusters has about a dozen of servers. From the get-go, we could see the load of insert operations spreaded across the different servers: horizontal scalability was properly handled without any additional efforts!

To ease our BI queries, we prepared indexes on created_at fields (if that is not a habit, read my article on the topic) with the index creation on Django Models, it works well. Querying on data created a few hours ago is well handled, and response time is totally acceptable. We noted more latency in our SQL queries when trying to select a more recent range, probably because this is a hotspot of the index, being actively written at. CockroachDB documentation mentions this issue and possible fixes:

– Using hash-sharded index for sequential indexes (such as created_at) ; but it is not clear how smoothly this could be used with Django ORM.

– Using AS OF SYSTEM TIME statement with a timestamp slightly in the past to avoid collision with on-going and recent insert: it is working fine through Metabase and perfectly fits our needs as we don’t need completely real-time data.

Scaling to production: the bottleneck of deleting old data

So the service runs well in production and we start gathering data, so far so good! And the efforts of getting used to CockroachDB have been minimal so far! But soon enough, we get our first CRON jobs responsible for deleting old data that start lagging behind, and failing in some cases.

We can’t reproduce the issue on our staging environment, but this one as way less rows in database than production… So we add a few logs and the conclusion is that DELETE queries are taking a lot of time, eventually timing out or returning errors like RETRY_SERIALIZABLE.

A look into the documentation mentions the performance issues of deletions and suggests several solutions to bulk-delete data. We adapted our CRON job to perform deletions by smaller batches and iterating over them, but only got mixed results: it worked well for a while but every now and then, slowdowns came back in the deletion process.

So we decided to try the built-in row-level TTL mechanism. This is a behavior not handled at Django level, so it needs to write a custom Django migration. It took some tries to get it right, especially because CockroachDB does not accept table alteration in transactions because rollback is not supported ; hence the manipulation of the schema_editor. Here is an example of a Django migration to add row-level TTL to a CockroachDB table:

from django.db import migrations

def forwards(apps, schema_editor):

def f(name, schema_editor):

if not schema_editor.connection.vendor == 'cockroachdb':

print(f"Not applying TTL to the database as it is not cockroachdb but {schema_editor.connection.vendor} instead.\n")

return

# Make sure not to be in atomic mode, as CockroachDB does not accept

# the table alteration in a transaction (no rollback possible)

in_atomic_block = schema_editor.connection.in_atomic_block

schema_editor.connection.in_atomic_block = False

set_argument = "SET (ttl_expiration_expression = '((created_at AT TIME ZONE ''UTC'') + INTERVAL ''2 days'')"\

"AT TIME ZONE ''UTC''', ttl_job_cron ='* * * * *');"

try:

schema_editor.execute("ALTER TABLE " + name + " " + set_argument)

print(f"Applied TTL to {name}.\n")

finally:

schema_editor.connection.in_atomic_block = in_atomic_block

f("my_table", schema_editor)

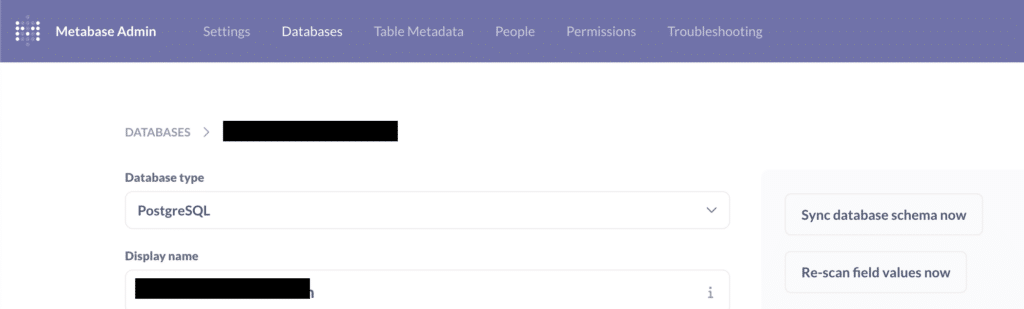

returnTo apply this process to all our tables, we had to add a created_at column to some of them, which did not have it originally, so that we could base the TTL on this column. So that is another Django migration, but an easy one this time. Now, we just have to tell Metabase to update the table model through the admin panel ; easy right? Right? Well, it turns out we never managed to make Metabase updates the table models of the CockroachDB database, when clicking “Sync database schema now” even though Metabase logs showed the operation as successful. It works well with PostgreSQL so maybe this is some incompatibility with CockroachDB? To work around this, a manual update of Metabase database did the trick.

Speeding up the deletion process with missing indexes

Finally, the we were able to see the row-level TTL mechanism do its magic. But it was still a bit slow, and was still outpaced by our INSERT rate. After digging a bit, we realized that using ForeignKeys in Django model with the db_index=True directive was not working as expected! Our model Job referenced another model Result:

job_result_id = models.ForeignKey(JobResult,on_delete=models.SET_NULL,db_index=True, null=True)But looking at the SHOW CREATE TABLE for the Job model:

- A foreign key constraint was properly applied.

- No index was created on the result_id column.

(...

CONSTRAINT job_result_id_ef980122_fk_jobresult_uuid FOREIGN KEY (result_id) REFERENCES public.jobresult(uuid),

INDEX "job_createdAt_565936c3" (created_at ASC),

)

WITH (ttl = 'on', ttl_expiration_expression = e'((created_at AT TIME ZONE \'UTC\') + INTERVAL \'7 days\')AT TIME ZONE \'UTC\'', ttl_job_cron = '* * * * *')As the JobResult table kept growing, the search to ensure the constraint on Job table is met when deleting a JobResult was taking longer because the whole JobResult table had to be parsed. To work around this, we had to manually add the index we originally wanted as the db_index directive seems not to work as expected with ForeignKeys on CockroachDB. Note that db_index worked well for the created_at column.

from django.db import migrations, models

class Migration(migrations.Migration):

dependencies = [

('saas', '9999_add_ttl'),

]

operations = [

migrations.RunSQL(

"CREATE INDEX i_saas_result_id_job ON job(result_id)",

"DROP INDEX i_saas_result_id_job"

),

]

Conclusion

Adopting a new technology in a company’s stack can be challenging and a long process. I was a bit skeptical about the capacity of CockroachDB, which is fundamentally distributed, to replace PostgreSQL without too much efforts. It turns out this works quite well! The complexity of database distribution is mostly hidden and you won’t need to care about it as a developer if you don’t do anything too complex.

However, for specific usage, like our data-intense needs, some deep-dives into the specificities of CockroachDB as a distributed database are needed to adjust your service. Second-looks and manual verifications are needed to make sure what you expect the framework to do is actually done with CockroachDB as well.

But the documentation is well built and answers all the concerns we had so far! CockroachDB is not as wide spreaded as PostgreSQL, and some differences here and there make the compatibility not straightforward. But going from PostgreSQL to a distributed database has been way smoother than I expected, and it is definitely worth the burden. I am convinced CockroachDB usage will keep growing as it answers well scalability issues that many tech companies face with more established SQL databases.

I am Mathieu Lamiot, a France-based tech-enthusiast engineer passionate about system design and bringing brilliant individuals together as a team. I dedicate myself to Tech & Engineering management, leading teams to make an impact, deliver value, and accomplish great things.