A real-life example

I recently had the opportunity to dive back into hands-on coding for my teams, something I hadn’t done for several months while focused on other leadership responsibilities. I knocked out a few quick PRs, each taking about an hour to implement and test. They were simple fixes, well-tested and ready to deliver value to our users. In this one, I added a simple retry strategy for known temporary errors returned from an API:

```diff
-        r = requests.post(
-            self.base_url + name + ".json",
-            data=data_json,
-            auth=(self.api_key, self.api_password),
-            headers=self.headers,
-        )
+        for _ in range(3):  # Retry up to 3 times
+            r = requests.post(
+                self.base_url + name + ".json",
+                data=data_json,
+                auth=(self.api_key, self.api_password),
+                headers=self.headers,
+            )
+
+            # Retry logic for specific error codes
+            retry_needed = False
+            if r.status_code == 422:
+                try:
+                    error_data = r.json()
+                    errors = error_data.get("errors", {})
+                    error_message = errors.get("error", "")
+                    if "record not found" in error_message:
+                        retry_needed = True
+                except json.JSONDecodeError:
+                    pass
+            if not retry_needed:
+                break  # Not an expected retry case, exit loop
+            time.sleep(1)
+        return self.resp_handler(r)
```

Then came the waiting game.

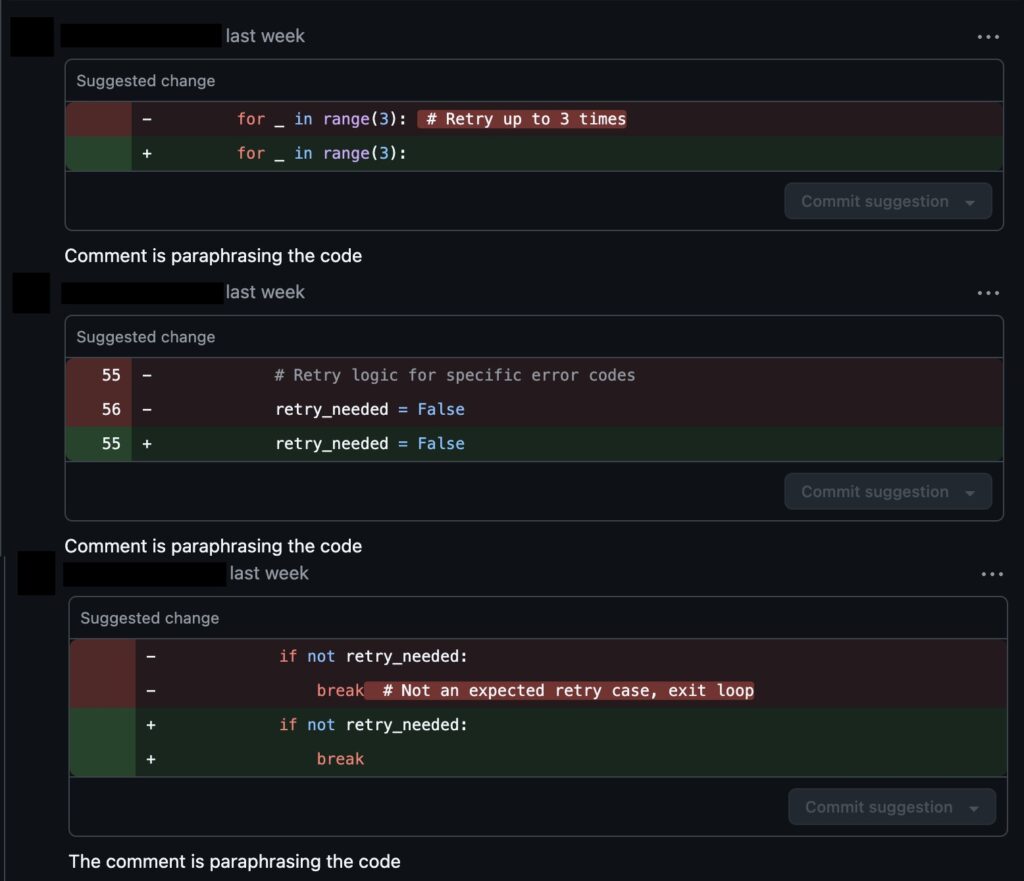

More than 24 hours passed before my first PR received a review. The feedback that finally arrived was about the comments. The points were worth raising, but they turned out to be mostly personal preferences, and nothing that would fundamentally change the functionality or prevent the code from working correctly. Meanwhile, end users were waiting for these fixes, blocked by what felt like an unnecessarily slow process.

After addressing the feedback, I submitted the changes and… waited again for another review cycle. What should have been a same-day deployment stretched into nearly a week. The code worked, the tests passed, and users needed the value, but our review process had become a bottleneck that prioritized perfection over progress.

This experience reminded me why I wanted to write this deep dive into code review processes. As engineering leaders, we often focus on the technical aspects of reviews while overlooking their impact on team velocity and morale, especially in remote environments where every delay is amplified. The key isn’t just knowing what to fix—it’s getting your team to embrace the changes. That’s why the most effective approach starts with your sprint retrospective, not a new policy document.

TL;DR: Code Review Problems & Solutions

Common Issues:

- PRs sitting in review queues for 24+ hours, turning simple fixes into week-long delays

- Large PRs (500+ lines) getting rubber-stamped without proper review

- Endless back-and-forth over trivial issues like typos or personal style preferences

- Architectural debates happening during code review instead of upfront planning

Battle-Tested Solutions:

- 24-hour SLA with Slack alerts and clear PR owner responsibility

- Reviewer autonomy to fix small issues directly (typos, comments, formatting)

- Follow-up vs. blocking framework to prioritize what actually needs immediate attention

- Technical grooming before coding to prevent design debates during review

- 50-line PR sweet spot for optimal speed and quality (backed by research)

- Retrospective-driven implementation for collaborative team buy-in

Why Code Reviews Matter (Even When They’re Painful)

Knowledge sharing is perhaps the most undervalued benefit of code reviews. A recent example from our team perfectly illustrates this: a new teammate asked me for courses or documentation to learn about our large, complex codebase. We don’t have comprehensive documentation—like many growing companies, our knowledge lives primarily in the code itself.

Instead of pointing them to random code exploration, I oriented them toward our code reviews. Even if they couldn’t meaningfully review the technical implementation yet, reading and understanding PRs provided a structured entry point into the codebase. PRs show what’s actively changing, what patterns we’re following, and what areas are most relevant to current work. Reading code randomly can be a huge waste of time—some areas are unchanged and untouched for years, making them irrelevant for learning current practices.

Challenging teammates with fresh perspectives catches blind spots that the original author missed. This isn’t about catching syntax errors—modern IDEs handle that. It’s about questioning assumptions, suggesting better approaches, and ensuring the solution aligns with broader architectural goals.

Fostering quality goes beyond finding bugs. Code reviews establish and reinforce coding standards, encourage documentation, and create a culture where craftsmanship matters.

The challenge is that these benefits only materialize when code reviews are done thoughtfully and efficiently. When the process breaks down, teams experience all the friction with none of the value—exactly what happened in my recent experience.

When Code Reviews Go Wrong: The Remote Team Reality

The experience I described in the introduction illustrates a broader pattern affecting many remote teams: small changes getting bogged down in endless back-and-forth while large, complex changes sail through without proper scrutiny.

I’ve seen large PRs—500, 800, even 1,200 lines of code—get approved with nothing more than “looks good to me” after what appeared to be a cursory glance. These rubber-stamp reviews are arguably worse than no reviews at all. They create a false sense of security while providing none of the actual benefits of peer review.

This creates the worst of both worlds: a slow process that doesn’t actually improve quality. Team morale suffers, velocity plummets, and developers start looking for ways to bypass the process entirely.

Remote work amplifies these problems. The asynchronous nature of remote communication means that every round of feedback adds significant delay. What might have been a five-minute conversation at a whiteboard becomes a multi-day Slack thread. Time zone differences make it worse—your reviewer might not see your response until tomorrow morning.

Battle-Tested Tactics to Transform Your Code Review Process

After experiencing these pain points firsthand, we implemented several changes at group.one that dramatically improved our code review process:

Establish Clear SLAs and Team Agreements

We implemented a simple but powerful rule: all PRs must receive their first review within 24 hours of submission. This isn’t just a suggestion—it’s a team commitment with teeth.

We set up Slack notifications that alert the team when PRs are approaching the 24-hour mark. The notifications include the name of the PR owner—the person who created the PR. As the PR owner, they’re responsible for ensuring their PR moves forward: getting a review, engaging in discussion with reviewers, addressing feedback promptly, and escalating if needed. This creates clear ownership rather than diffusing responsibility across the team.

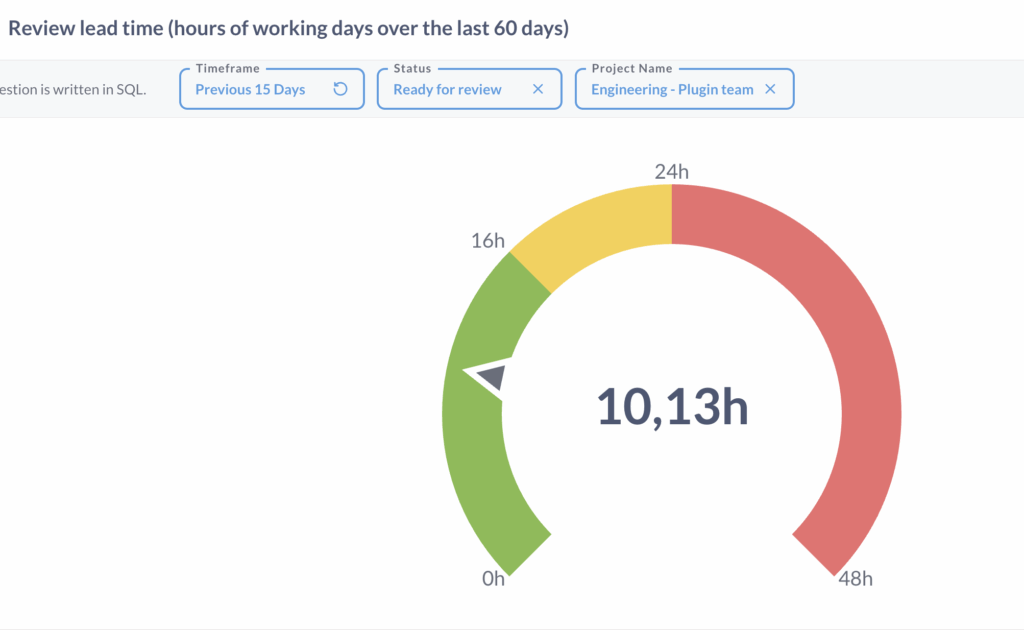
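As an illustration, here is a minimal sketch of how such an alert could be computed once PR data has been fetched. The `PullRequest` record, the 20-hour warning threshold, and the message wording are assumptions made for this example, not our actual bot; posting the text to Slack is left out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

SLA = timedelta(hours=24)          # first review is due within 24 hours
WARN_AFTER = timedelta(hours=20)   # hypothetical: start warning 4 hours before the SLA


@dataclass
class PullRequest:
    """Hypothetical record; a real integration would fill it from the Git host's API."""
    title: str
    owner: str
    opened_at: datetime
    first_review_at: Optional[datetime] = None


def prs_needing_alert(prs, now):
    """Return unreviewed PRs that have waited at least WARN_AFTER, oldest first."""
    waiting = [
        pr for pr in prs
        if pr.first_review_at is None and now - pr.opened_at >= WARN_AFTER
    ]
    return sorted(waiting, key=lambda pr: pr.opened_at)


def format_alert(pr, now):
    """Build the alert text, naming the PR owner so responsibility is explicit."""
    waited = now - pr.opened_at
    waited_hours = int(waited.total_seconds() // 3600)
    status = "past" if waited >= SLA else "approaching"
    return (
        f"PR '{pr.title}' has waited {waited_hours}h without a review "
        f"({status} the 24h SLA). Owner: {pr.owner}"
    )
```

Naming the owner in the message mirrors the ownership rule described above: the alert goes to the whole team, but one person is accountable for moving the PR forward.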

We also made our time-to-first-review metrics publicly accessible to the team. Data is key to improvement, and transparency helps everyone understand where we stand.

Results: Our average time-to-first-review dropped from 2.5 days to 10 hours.

Define Reviewer Autonomy Boundaries

We established clear guidelines about what reviewers can fix directly versus what requires discussion with the author.

Reviewers are empowered to make direct changes for:

- Obvious typos in comments or documentation

- Missing or unclear code comments

- Formatting issues not caught by automated tools

- Simple refactoring that improves readability without changing logic

We also established boundaries around “personal opinion” feedback. If a reviewer suggests a change that’s purely stylistic and doesn’t impact functionality, maintainability, or team standards, the author can push back without justification.

Follow-up vs. Blocking: The Art of Prioritization

We developed a framework to help reviewers decide what requires immediate attention versus what can become a follow-up ticket.

Issues that should block a PR:

- Security vulnerabilities

- Functional bugs

- Violations of established coding standards

- Changes that break existing functionality

- Missing tests for new functionality

Issues that can become follow-up tickets:

- Performance optimizations (unless critical)

- Additional test coverage beyond the minimum

- Refactoring opportunities in adjacent code

- Documentation improvements

- Nice-to-have features or enhancements

Reviewers must be explicit about which category feedback falls into: “this could be optimized—follow-up ticket” or “this optimization is critical for performance—please address before merging.”
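One lightweight way to make the category explicit is a prefix on each review comment. The sketch below assumes a hypothetical team convention of `blocking:` and `follow-up:` prefixes; it is not a feature of any review tool, and the function names are illustrative.

```python
from enum import Enum


class Severity(Enum):
    BLOCKING = "blocking"
    FOLLOW_UP = "follow-up"
    UNLABELED = "unlabeled"


def classify(comment: str) -> Severity:
    """Read the severity from the comment's prefix (hypothetical convention)."""
    text = comment.strip().lower()
    if text.startswith("blocking:"):
        return Severity.BLOCKING
    if text.startswith("follow-up:"):
        return Severity.FOLLOW_UP
    return Severity.UNLABELED


def triage(comments):
    """Group comments by severity so the author sees what must be fixed before merging."""
    groups = {severity: [] for severity in Severity}
    for comment in comments:
        groups[classify(comment)].append(comment)
    return groups


def can_merge(comments) -> bool:
    """Only blocking comments hold up the merge; follow-ups become tickets."""
    return not triage(comments)[Severity.BLOCKING]
```

Unlabeled comments are kept in their own group on purpose: they are the ones where the author should ask the reviewer which category was intended.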

Front-load Decisions: The Grooming Advantage

We moved architectural and approach discussions earlier in the development process. Before any significant feature work begins, we hold a technical grooming session where the team discusses the proposed approach, identifies potential challenges, and aligns on the implementation strategy.

Here are examples of templates we use for grooming:

User story grooming template

Scope a solution: To implement this feature, we must …

Development steps:

- [ ] Add DB column

- [ ] Create new endpoint

- [ ] Implement business logic

How will this be validated? Consider manual test scenarios and possible automations. Will a specific setup be needed?

Grooming confidence level: Are you sure the proposed solution will work? Have you tested it? Do you foresee any risks or unknowns?

Can be peer-coded: Yes/No

Is a refactor needed in that part of the codebase? Yes, the data layer could be re-written to be more generic, easing future updates. This would make the effort size XS/S/M/L/XL.

Effort estimation: XS/S/M/L/XL

Bug grooming template

Reproduce the problem: To reproduce the issue, …

Identify the root cause: The issue is that …

Scope a solution: To solve the issue, we must …

Development steps:

- [ ] Add DB column

- [ ] Create new endpoint

- [ ] Implement business logic

How will this be validated? Consider manual test scenarios and possible automations. Will a specific setup be needed?

Grooming confidence level: Are you sure the proposed solution will work? Have you tested it? Do you foresee any risks or unknowns?

Can be peer-coded: Yes/No

Is a refactor needed in that part of the codebase? Yes, the data layer could be re-written to be more generic, easing future updates. This would make the effort size XS/S/M/L/XL.

Effort estimation: XS/S/M/L/XL

As a result, reviews can focus on implementation details, code quality, and edge cases rather than fundamental design questions.

The Goldilocks PR: Finding the Right Size

Research from Graphite shows that the ideal PR size is around 50 lines of code. Their analysis found that 50-line changes are reviewed and merged ~40% faster than 250-line changes, are 15% less likely to be reverted, and receive 40% more review comments per line changed. This study, and several others you can find online, are loaded with insights about how PR size affects merge time, defect rates, and developer engagement.
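To make PR size visible before review starts, a team could compute it from `git diff --numstat`, which prints one tab-separated line per file with added lines, deleted lines, and the path. The sketch below is an assumption-laden example: the function names and the `small`/`medium`/`large` labels are hypothetical, and the thresholds simply echo the 50-line and 250-line figures quoted above.

```python
def count_changed_lines(numstat: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output.

    Each line holds three tab-separated fields: added, deleted, path.
    Binary files report "-" for both counts and are skipped.
    """
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3 or parts[0] == "-" or parts[1] == "-":
            continue
        total += int(parts[0]) + int(parts[1])
    return total


def size_label(changed_lines: int) -> str:
    """Map a line count to a hypothetical size label."""
    if changed_lines <= 50:
        return "small"
    if changed_lines <= 250:
        return "medium"
    return "large"
```

The input could come from something like `git diff --numstat main...HEAD`, assuming `main` is the base branch.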

Strategies for breaking down large features:

- Feature flagging: Merge incomplete features safely behind flags

- Interface-first development: Define and review APIs/schemas before implementation

- Incremental refinement: Series of focused changes that gradually transform code
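To illustrate the first strategy, here is a minimal feature-flag sketch. It assumes flags are read from environment variables named `FEATURE_<NAME>`; that naming scheme and the `export` functions are hypothetical, chosen only to show how an unfinished code path can be merged without being reachable by default.

```python
import os


def flag_enabled(name: str, env=None) -> bool:
    """Return True when FEATURE_<NAME> holds a truthy value (hypothetical naming scheme)."""
    env = os.environ if env is None else env
    value = env.get(f"FEATURE_{name.upper()}", "")
    return value.strip().lower() in {"1", "true", "yes", "on"}


def legacy_export(rows):
    """Existing behavior, which stays the default."""
    return ",".join(str(row) for row in rows)


def new_export(rows):
    """Work-in-progress path: merged early, but unused while the flag is off."""
    return "\n".join(str(row) for row in rows)


def export(rows, env=None):
    """Route to the new code path only when the flag is switched on."""
    if flag_enabled("new_export", env):
        return new_export(rows)
    return legacy_export(rows)
```

With this shape, each small PR can extend `new_export` and be reviewed on its own, while users keep getting `legacy_export` until the flag is turned on.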

Implementation Roadmap: Making the Change

Why Retrospectives Beat Top-Down Changes: Most articles about improving code reviews focus on what to change but ignore how to change it. The retrospective approach ensures your team owns the solution rather than having it imposed. When developers participate in identifying problems and designing solutions, adoption tends to be much higher than with mandated process changes.

Use sprint retrospectives to drive change: Raise these concerns during retrospective meetings with specific examples from the previous sprint—PRs that took too long to review, feedback that could have been handled differently, or bottlenecks that affected delivery. When the pain is fresh and well-illustrated, the team is more motivated to change.

Decide on one or two specific actions to try in the next sprint. Example: “Reviewers will fix small issues themselves instead of requesting changes” or “We’ll aim for 24-hour first reviews.”

Next retrospective: Review and iterate. Discuss how the changes worked. What improved? What didn’t work as expected? Use this feedback to refine your approach or try additional tactics.

Success metrics to track:

- Average time from PR submission to first review

- Average number of review cycles per PR

- PR size distribution

- Time from PR submission to merge
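These four metrics can be computed from a simple export of merged PRs. The sketch below assumes a hypothetical `PRRecord` with illustrative field names, and size buckets that reuse the 50-line and 250-line figures mentioned earlier; it is not tied to any particular Git host.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class PRRecord:
    """Hypothetical export of one merged PR; field names are illustrative."""
    opened_at: datetime
    first_review_at: datetime
    merged_at: datetime
    review_cycles: int
    changed_lines: int


def hours(delta: timedelta) -> float:
    """Convert a duration to fractional hours."""
    return delta.total_seconds() / 3600


def summarize(prs):
    """Compute the four metrics listed above for a non-empty list of merged PRs."""
    buckets = {"<=50": 0, "51-250": 0, ">250": 0}
    for pr in prs:
        if pr.changed_lines <= 50:
            buckets["<=50"] += 1
        elif pr.changed_lines <= 250:
            buckets["51-250"] += 1
        else:
            buckets[">250"] += 1
    return {
        "avg_hours_to_first_review": mean(
            [hours(pr.first_review_at - pr.opened_at) for pr in prs]
        ),
        "avg_review_cycles": mean([pr.review_cycles for pr in prs]),
        "size_distribution": buckets,
        "avg_hours_to_merge": mean([hours(pr.merged_at - pr.opened_at) for pr in prs]),
    }
```

Running it once per sprint and bringing the numbers to the retrospective gives the team a shared baseline to compare against.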

From Bottleneck to Accelerator

The transformation of our code review process at group.one didn’t just improve velocity—it improved code quality and team culture. When reviews happen quickly and focus on the right things, developers feel supported rather than blocked.

The compound effect is powerful: better reviews lead to better code, which leads to fewer bugs, which leads to faster development cycles.

If your team is struggling with code review bottlenecks, start by bringing it up in your next retrospective with specific examples. Pick one tactic from this article—maybe the 24-hour SLA or the reviewer autonomy guidelines—and try it for a sprint. Measure the impact, gather feedback, and iterate.

Unlike other process improvements that rely on management mandates, this retrospective-driven approach ensures your team becomes co-creators of the solution. That collaborative ownership is what transforms temporary fixes into lasting cultural change.

Remember, the best code review process is one that your team actually follows and believes in. Focus on removing friction, setting clear expectations, and creating a culture where quality and velocity reinforce each other rather than compete.

I am Mathieu Lamiot, a France-based tech-enthusiast engineer passionate about system design and bringing brilliant individuals together as a team. I dedicate myself to Tech & Engineering management, leading teams to make an impact, deliver value, and accomplish great things.